Virtual & Augmented Reality

Virtual reality (VR) first captured the public imagination over twenty years ago. Recent improvements in computational processing speed, together with consumer-driven competition, have since led to the development of powerful VR systems. As highly immersive consumer-level simulation devices, VR systems are an attractive tool for a variety of research areas, such as human-to-machine interaction, fundamental perception and movement studies, and neuro-rehabilitation. The portable and interactive nature of VR devices, together with their ability to engage both visual and auditory perception in a naturalistic manner, enables us to design experiments that more closely resemble everyday activity. As this technology is now gaining considerable traction in the neuroscience community, we aim to be at the forefront of research that bridges the gap between virtual reality, neurobiological data and real-world applications.

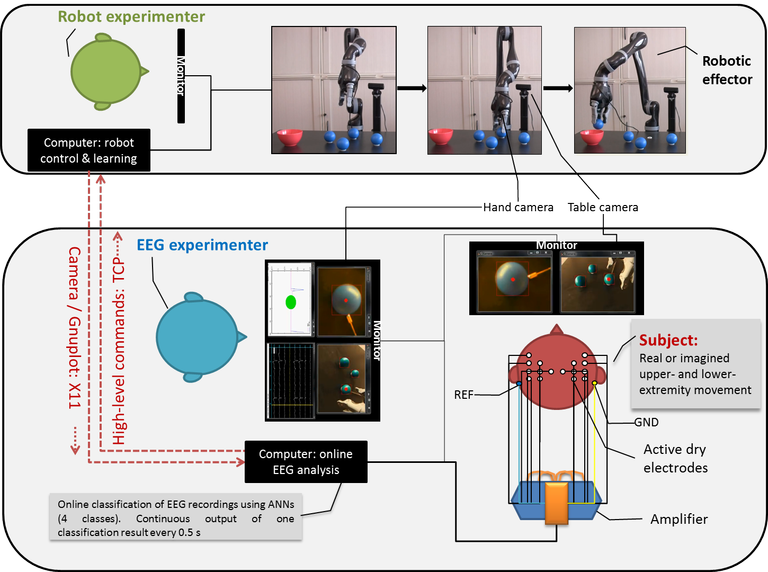

Schematic representation of experimental setup

Subjects and robot are located several kilometres apart and connected through the internet by TCP/IP and X-forwarding. EEG subjects see the online video stream from the hand and table cameras of the robot arm. The EEG experimenter sees both video streams, the sent data and the ongoing EEG recordings. The robot experimenter sees both video streams, the received data and the flow of robotic control. GND: ground; REF: reference; ANN: artificial neural network. Reproduced from Lampe et al. (2014).

The term Augmented Reality (AR) describes interfaces that provide additional information about, or increased interactivity with, one’s surroundings, for example by projecting a computer-generated head-up display over live camera footage. We developed a high-level Brain-Machine Interface (BMI) that allows subjects to remotely control a robotic arm and manipulate objects using motor imagery decoding. Through object recognition, the augmented reality environment gives the subject the means to select target objects at a distant location and subsequently interact with them over an internet connection.
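To make the idea of high-level control concrete, the following Python sketch shows how a decoded selection might be turned into a single command and passed to the robot side over a stream connection. It is an illustration under stated assumptions, not the implementation described in the publications below: the JSON message format, the function names (`select_object`, `encode_command`, `decode_command`, `demo`), the object labels and the `"grasp"` action are all hypothetical, and a local socket pair stands in for the internet link.

```python
import json
import socket
from typing import Sequence


def select_object(decoded_class: int, candidates: Sequence[str]) -> str:
    """Map a decoder output index to one of the recognized objects.

    Assumption: the decoder yields an integer class; the real decoder
    output format is not given in the source.
    """
    return candidates[decoded_class % len(candidates)]


def encode_command(object_id: str, action: str) -> bytes:
    """Serialize a high-level command as one newline-terminated JSON line.

    Hypothetical wire format, chosen only for this sketch.
    """
    message = {"object": object_id, "action": action}
    return (json.dumps(message) + "\n").encode("utf-8")


def decode_command(line: bytes) -> dict:
    """Parse one JSON line back into a command dictionary."""
    return json.loads(line.decode("utf-8"))


def demo() -> dict:
    """Send one command from the 'subject' side to the 'robot' side."""
    # Two connected local sockets stand in for the remote TCP connection.
    subject_side, robot_side = socket.socketpair()
    with subject_side, robot_side:
        # Pretend the decoder selected class 1 among three recognized objects.
        target = select_object(1, ["cup", "ball", "box"])
        subject_side.sendall(encode_command(target, "grasp"))
        # The robot side reads exactly one newline-terminated message.
        with robot_side.makefile("rb") as reader:
            return decode_command(reader.readline())


if __name__ == "__main__":
    print(demo())
```

The point of the sketch is the division of labour: the subject only chooses a target, while everything needed to reach and grasp it is left to the autonomous robot on the receiving end.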

Publications:

- Lampe, Thomas, Lukas D.J. Fiederer, Martin Voelker, Alexander Knorr, Martin Riedmiller, and Tonio Ball. “A Brain-Computer Interface for High-Level Remote Control of an Autonomous, Reinforcement-Learning-Based Robotic System for Reaching and Grasping.” In Proceedings of the 19th International Conference on Intelligent User Interfaces, 83–88. IUI ’14. New York, NY, USA: ACM, 2014. doi:10.1145/2557500.2557533.

- Fiederer, L. D. J., T. Lampe, M. Voelker, L. Spinello, W. Burgard, M. Riedmiller, and T. Ball. “An Augmented Reality Approach for BCI Control of Intelligent Autonomous Robots.” In Biomedical Engineering / Biomedizinische Technik, 59:S1097–S1097, 2014. http://scholar.google.com/scholar?cluster=8912457567397758677&hl=en&oi=scholarr.